Posted September 30th, 2010 by rybolov

I’ve been waiting all of September for FedRAMP to be released and hoping they get over the last-minute hurdles to put something out into view. Our lolcats will feel much more secure now with a squishy buddy.

Posted in IKANHAZFIZMA |  1 Comment »

Tags: cloud • cloudcomputing • government • infosec • infosharing • lolcats • management • scalability

Posted September 29th, 2010 by rybolov

Ah yes, I’ve explained this about a hundred times this week (at that thing that I can’t blog about, but @McKeay @MikD and @Sawaba were there so fill in the gaps), so I thought I should get this down somewhere.

The 3 factors that determine how much money you will make (or lose) in a consulting practice:

- Bill Rate: how much you charge your customers. This is pretty familiar to most folks.

- Utilization: what percentage of your employees’ time is spent being billable. The trick here is if you can get them to work 50 hours/week because then they’re at 125% utilization and suspiciously close to “uncompensated overtime”, a concept I’ll maybe explain in the future.

- Leverage: the ratio of bosses to worker bees. More experienced people are more expensive to have as employees. Usually a company loses money on these folks because the bill rate is less than what they are paid. Conversely, the biggest margin is on work done by junior folks. A highly leveraged ratio is 1:25, a lowly leveraged ratio is 1:5 or even less.
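To put numbers on utilization and leverage, here is a minimal sketch. The 40-hour standard week is my assumption (it is what makes 50 hours come out to 125%), and the function names are made up for illustration.

```python
# Assumption: utilization is measured against a 40-hour standard week.
STANDARD_WEEK_HOURS = 40


def utilization(billable_hours: float, standard_hours: float = STANDARD_WEEK_HOURS) -> float:
    """Fraction of the standard week that is billable (1.0 == 100%)."""
    return billable_hours / standard_hours


def workers_per_boss(bosses: int, workers: int) -> float:
    """Leverage expressed as worker bees per boss, so 1:25 -> 25.0."""
    return workers / bosses


if __name__ == "__main__":
    print(f"50 billable hours/week: {utilization(50):.0%} utilization")
    print(f"1:25 leverage: {workers_per_boss(1, 25):.0f} workers per boss")
    print(f"1:5 leverage: {workers_per_boss(1, 5):.0f} workers per boss")
```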

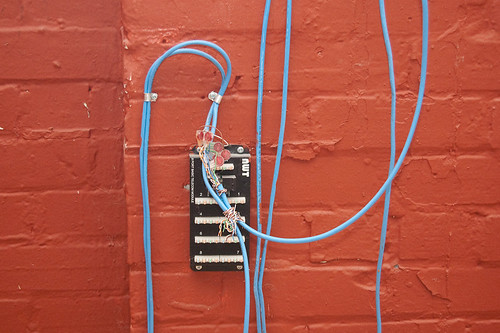

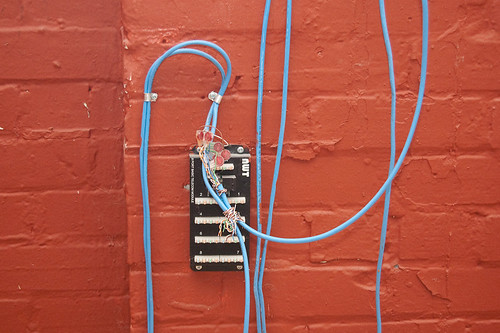

Site Assessment photo by punkin3.14.

And then we have the security assessments business and security consulting in general. Let’s face it, security assessments are a commodity market. Since most competitors in the assessment space charge the same amount (or at least relatively close to each other), a few things follow about the profitability of an assessment engagement:

- Assuming a Firm Fixed Price for the engagement, the Effective Bill Rate is inversely proportional to the number of hours you spend on the project. For example, $30K/60 hours = $500/hour and $30K/240 hours = $125/hour. I know this is a shocker, but the less time you spend on an assessment, the bigger your margin, though you would also expect the quality to suffer.

- Highly leveraged engagements let you keep margin but over time the quality suffers. 1:25 is incredibly lousy for quality but awesome for profit. If you start looking at security assessment teams, they’re usually 1:4 or 1:5 which means that the assessment vendor is getting squeezed on margin.

- Keeping your people engaged as much as possible gives you that extra bit of margin. Of course, if they’re spending 100% of their time on the road, they’ll get burned out really quickly. This is bad for both staff longevity (and subsequent recruiting costs) and work quality.
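The Firm Fixed Price arithmetic from the first bullet can be sketched in a few lines. The $30K price and the 60 and 240 hour figures are the ones used above; the 120-hour row and the function name are my own additions for illustration.

```python
def effective_bill_rate(fixed_price: float, hours_spent: float) -> float:
    """Effective hourly rate on a fixed-price engagement."""
    if hours_spent <= 0:
        raise ValueError("hours_spent must be positive")
    return fixed_price / hours_spent


if __name__ == "__main__":
    # Same fixed price, more hours on the job, lower effective rate.
    for hours in (60, 120, 240):
        rate = effective_bill_rate(30_000, hours)
        print(f"$30K over {hours} hours = ${rate:.0f}/hour")
```

Quadrupling the hours cuts the effective rate to a quarter, which is the squeeze on quality described above.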

Now for the questions that this raises for me:

- Is there a 2-tier market where there are ninjas (expensive, high quality) and farmers (commodity prices, OK quality)?

- How do we keep audit/assessment quality up despite economic pressure? I.e., how do we create the conditions where the ninja business model is viable?

- Are we putting too much trust in our auditors/assessors for what we can reasonably expect them to perform successfully?

- How can any information security framework focused solely on audit/assessment survive past 5 years? (5-10 years is the SWAG time on how long it takes a technology to go from “nobody’s done this before” to “we have a tool to automate most of it”)

- What’s the alternative?

Posted in Rants, What Doesn't Work |  3 Comments »

Tags: accreditation • auditor • C&A • cashcows • certification • compliance • economics • fisma • government • infosec • management • moneymoneymoney • pci-dss • publicpolicy • security

Posted August 24th, 2010 by rybolov

Ah yes, the magic of Google hacking and advanced operators. All the “infosec cool kids” have been having a blast this week using a combination of filetype and site operators to look for classification markings in documents. I figure that with the WikiLeaks brouhaha lately, it might be a good idea to write a “howto” for government organizations to check for web leaks.

Now for the search string: “enter document marking here” site:agency.gov filetype:rtf | filetype:ppt | filetype:pptx | filetype:csv | filetype:xls | filetype:xlsx | filetype:docx | filetype:doc | filetype:pdf. This searches the typical document formats on the agency.gov website for a specific caveat. You could easily put in a key phrase used for marking sensitive documents in your agency. Obviously there will be results from published organizational policy describing how to mark documents, but there will also be other things that should be looked at.

Typical document markings (all you have to do is pick out the key phrases from your agency policy that give the verbatim disclaimer to put on docs):

- “This document contains sensitive security information”

- “Disclosure is prohibited”

- “This document contains confidential information”

- “Not for release”

- “No part of this document may be released”

- “Unauthorized release may result in civil penalty or other action”

- Any one of a thousand other key words listed on Wikipedia

Other ideas:

- Use the “site:gov” operator to look for documents government-wide.

- Drop the “site” operator altogether and look for agency information that has been published on the web by third parties.

- Chain the markings together with an “or” for one long search string: “not for release” | “no part of this document may be released” site:gov filetype:rtf | filetype:ppt | filetype:pptx | filetype:csv | filetype:xls | filetype:xlsx | filetype:docx | filetype:doc | filetype:pdf
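If you want to generate these search strings rather than type them by hand, here is a minimal sketch. It only builds the query text, using the markings and filetype list from this post; the function name and parameters are hypothetical, and how you actually run the search is up to you.

```python
# File extensions taken from the search string in this post.
DOC_TYPES = ("rtf", "ppt", "pptx", "csv", "xls", "xlsx", "docx", "doc", "pdf")


def build_leak_query(markings, site=None, filetypes=DOC_TYPES):
    """Build a search string from marking phrases, an optional site, and filetypes.

    markings:  phrases to look for; several are chained with "|" (or).
    site:      e.g. "agency.gov" or "gov"; None drops the site operator.
    filetypes: extensions to restrict the search to.
    """
    if not markings:
        raise ValueError("need at least one document marking")
    parts = [" | ".join(f'"{m}"' for m in markings)]
    if site:
        parts.append(f"site:{site}")
    parts.append(" | ".join(f"filetype:{ext}" for ext in filetypes))
    return " ".join(parts)


if __name__ == "__main__":
    # One marking on one agency site.
    print(build_leak_query(["Not for release"], site="agency.gov"))
    # Chained markings, government-wide.
    print(build_leak_query(
        ["not for release", "no part of this document may be released"],
        site="gov",
    ))
    # No site operator: look for third-party copies anywhere on the web.
    print(build_leak_query(["Disclosure is prohibited"]))
```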

If you’re not doing this already, I recommend setting up a weekly or daily search for documents that have been indexed and following up on each hit as an incident.

Posted in Hack the Planet, Technical, What Works |  2 Comments »

Tags: datacentric • government • infosec • infosharing • management • privacy • pwnage

Posted August 17th, 2010 by rybolov

For some reason, “Rebuilding C&A” has been a perennial traffic magnet for me for a year or so now. Seeing how that particular post was written in 2007, I find this an interesting stat. Maybe I hit all the SEO terms right. Or maybe the zeitgeist of the Information Assurance community is how to do it right. Anyway, if you’re in Government and information security, it might be worthwhile to check out this old nugget of wisdom from yesteryear.

Posted in FISMA, NIST, The Guerilla CISO |  No Comments »

Tags: 800-37 • 800-53 • accreditation • C&A • certification • comments • compliance • fisma • government • infosec • management • NIST • security

Posted August 13th, 2010 by rybolov

Metricon 5 was this week. It was a blast and you should have been there.

One of the things the program committee worked on was more of a practitioner focus. I think the whole event was a good mix between theory and application and the overall blend was really, really good. Talking to the speakers before the event was much awesome as I could give them feedback on their talk proposal and then see how that conversation led to an awe-inspiring presentation.

I brought a couple security manager folks I know along with me and their opinion was that the event was way awesome. If you’re one of my blog readers and didn’t hunt me down and say hi, then whatcha waitin’ for, drop me an email and we’ll chat.

You can go check out the slides and papers at the Security Metrics site.

My slides are below. I’m not sure if I was maybe a bit too far “out there” (I do that from time to time) but what I’m really looking for is a scorecard so that we can consciously build regulation and compliance frameworks instead of the way we’ve been doing it. This would help tremendously with public policy, industry self-regulation, and anybody who is trying to build their own framework.

Posted in Public Policy, Speaking |  1 Comment »

Tags: catalogofcontrols • certification • compliance • government • infosec • infosharing • law • legislation • management • publicpolicy • security • speaking

Posted August 4th, 2010 by rybolov

Now I’ve been reasonably impressed with GovInfoSecurity.com and Eric Chabrow’s articles but this one supporting 20 CSC doesn’t make sense to me. On one hand, you don’t have to treat your auditor’s word as gospel but on the other hand if we feed them what to say then suddenly it has merit?

Or is it just that all the security management frameworks suck and auditors remind us of that on a daily basis? =)

However, it seems that there are 3 ways that people approach frameworks:

- From the Top–starting at the organization mission and working down the stack through policy, procedures, and then technology. This is the approach taken by holistic frameworks like the NIST Risk Management Framework and ISO 27001/27002. I think that if we start solely from this angle, then we end up with a massive case of analysis paralysis and policy created in a vacuum that is about as effective as it might sound.

- From the Bottom–starting with technology, then building procedures and policy where you need to. This is the approach of the 20 Critical Security Controls. When we start with this, we go all crazy buying bling and in 6 months it all implodes because it’s just not sustainable–you have no way to justify additional money or staff to operate the gear.

- And Then There’s Reality–what I really need is both approaches at the same time and I need it done a year ago. *sigh*

Posted in FISMA, Rants |  3 Comments »

Tags: 20csc • auditor • catalogofcontrols • fisma • government • infosec • management • security
